An Introduction to High Availability Architecture

In the real world, the performance of your servers can dip for many reasons, ranging from a sudden spike in traffic to a sudden power outage. It can be much worse, and your servers can be crippled, irrespective of whether your applications are hosted in the cloud or on a physical machine. Such situations are unavoidable. However, rather than hoping that they don’t occur, you should prepare your systems so that these events do not turn into failures.

The answer to the problem is the use of a High Availability (HA) configuration or architecture. High availability architecture is an approach to defining the components, modules or implementation of services of a system so that it delivers optimal operational performance, even at times of high load. Although there are no fixed rules for implementing HA systems, there are a few good practices that you should follow to gain the most out of the least resources.

Why do you need it?

Let us define downtime before we move further. Downtime is the period of time when your system (or network) is not available for use, or is unresponsive. Downtime can cause a company huge losses, as all of its services are put on hold while its systems are down. In August 2013, Amazon went down for 15 minutes (both web and mobile services), and ended up losing over $66,000 per minute. Those are huge numbers, even for a company the size of Amazon.

There are two types of downtime: scheduled and unscheduled. Scheduled downtime is the result of maintenance, which is unavoidable. This includes applying patches, updating software or even changing the database schema. Unscheduled downtime, however, is caused by some unforeseen event, such as a hardware or software failure. This can happen due to a power outage or the failure of a component. Scheduled downtime is generally excluded from performance calculations.

The prime objective of implementing a High Availability architecture is to make sure your system or application is configured to handle different loads and different failures with minimal or no downtime. There are multiple components that help you achieve this, and we will discuss them briefly.

How is availability measured?

Organizations that plan to fully utilize a cloud infrastructure must also be capable of meeting demands for 24/7 availability. Availability can be measured as the percentage of time that systems are available.

x = (n - y) * 100/n

Where n is the total number of minutes in a calendar month and y is the total number of minutes that the service is unavailable in that calendar month. High availability simply refers to a component or system that is continuously operational for a desirably long period of time. The widely held but almost impossible to achieve standard of availability for a product or system is referred to as ‘five 9s’ (99.999 percent) availability. High availability is a requirement for any enterprise that hopes to protect its business against the risks brought about by a system outage. These risks can lead to millions of dollars in revenue loss.
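
To make the formula concrete, here is a minimal Python sketch that applies it to a 30-day month. The function names and the sample downtime figure are assumptions chosen for illustration; only the formula itself comes from the text above.

```python
MINUTES_PER_DAY = 24 * 60


def availability_percent(total_minutes: float, downtime_minutes: float) -> float:
    """Apply x = (n - y) * 100 / n from the text."""
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    if not 0 <= downtime_minutes <= total_minutes:
        raise ValueError("downtime must be between 0 and total_minutes")
    return (total_minutes - downtime_minutes) * 100 / total_minutes


def allowed_downtime_minutes(total_minutes: float, target_percent: float) -> float:
    """Invert the formula: how many minutes of downtime a target allows."""
    return total_minutes * (100 - target_percent) / 100


if __name__ == "__main__":
    n = 30 * MINUTES_PER_DAY  # 43,200 minutes in a 30-day month
    # Hypothetical month with 43.2 minutes of downtime.
    print(f"availability: {availability_percent(n, 43.2):.3f}%")
    # Downtime budget for 'five 9s' in the same month.
    print(f"five 9s budget: {allowed_downtime_minutes(n, 99.999):.3f} minutes")
```

Under these assumptions, 43.2 minutes of downtime corresponds to 99.9 percent availability, while ‘five 9s’ leaves a budget of roughly 0.43 minutes (about 26 seconds) for the whole month, which shows why that standard is so hard to reach.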

Is it really worth the money?

Going for a high availability architecture gives you higher performance, but it comes at a big cost too. You must ask yourself whether the decision is justified from a financial point of view.

A decision must be made on whether the extra uptime is truly worth the amount of money that has to go into it. You must ask yourself how damaging potential downtimes can be for your company and how important your services are in running your business.

How do we achieve it?

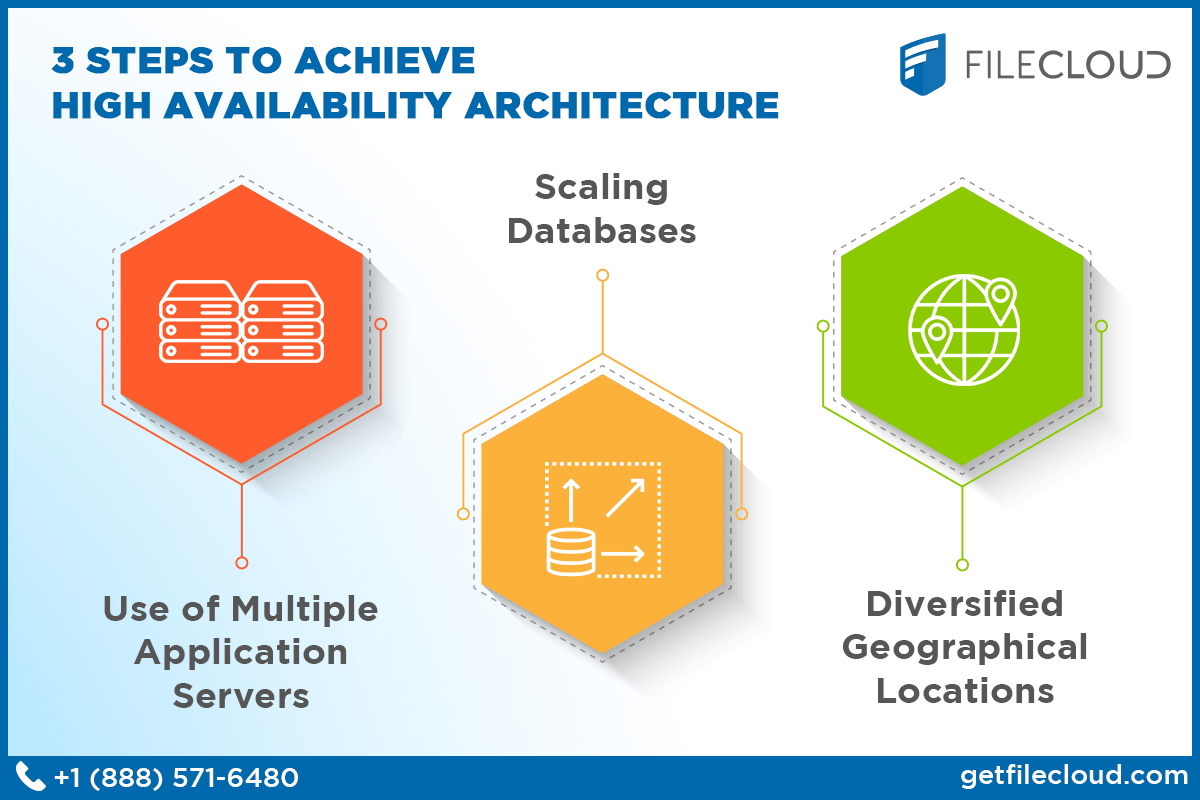

3 Steps to Achieve High Availability Architecture

Now that you have decided to go with it, let’s discuss the ways to implement it. Counterintuitively, simply adding more components to a system does not make it more stable or more available. It can actually do the opposite, because every additional component increases the probability of a failure. Modern designs distribute workloads across multiple instances, such as a network or a cluster. This process, known as load balancing, helps optimize resource use, maximize output, minimize response times and avoid overburdening any single system. High availability also involves switching to a standby resource, such as a server, component or network, when an active one fails. This is known as failover.
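
The effect of component count on availability can be illustrated with a small calculation. This sketch assumes that components fail independently of each other, which real systems only approximate, and the function names and the 99 percent figure are made up for the example.

```python
from math import prod
from typing import Iterable


def series_availability(availabilities: Iterable[float]) -> float:
    """Availability of a chain in which every component must be up."""
    return prod(availabilities)


def redundant_availability(availability: float, copies: int) -> float:
    """Availability when at least one of several identical copies must be up."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return 1 - (1 - availability) ** copies


if __name__ == "__main__":
    # Three components that each have to work, each up 99% of the time.
    print(f"three in series: {series_availability([0.99, 0.99, 0.99]):.6f}")
    # One component with a standby copy that can take over.
    print(f"two redundant copies: {redundant_availability(0.99, 2):.6f}")
```

With these numbers, chaining three components drops availability to about 97 percent, while a single redundant copy raises it to 99.99 percent. More components help only when they are arranged so that one can take over for another.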

1. Use of Multiple Application Servers:

Imagine that you have a single server to render your services and a sudden spike in traffic leads to its failure (or crashes it). In such a situation, until your server is restarted, no more requests can be served, which leads to a downtime.

The obvious solution here is to deploy your application over multiple servers. You need to distribute the load among all of them, so that none of them is overburdened and the output is optimal. You can also deploy parts of your application on different servers. For instance, there could be a separate server for handling mail, or a separate one for serving static files such as images (as a Content Delivery Network does).
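
One simple way to spread requests across several application servers is round-robin selection. The sketch below is only an illustration of that idea; the class name and the server names are hypothetical, and production load balancers also take server health and load into account.

```python
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._servers = list(servers)
        if not self._servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the list endlessly: 1, 2, 3, 1, 2, 3, ...
        self._rotation = cycle(self._servers)

    def pick(self) -> str:
        """Return the server that should handle the next request."""
        return next(self._rotation)


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    for request_id in range(4):
        print(f"request {request_id} goes to {balancer.pick()}")
```

Each server receives every third request here, so no single machine absorbs a traffic spike on its own.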

2. Scaling Databases:

Databases are the most popular and perhaps one of the most conceptually simple ways to save user data. One must remember that databases are as important to your services as your application servers. Databases run on separate servers (such as Amazon RDS) and are prone to crashes as well. What’s worse is that database crashes can lead to a loss of user data, which can prove to be costly.

Redundancy is a process which creates systems with high levels of availability by achieving failure detectability and avoiding common cause failures. This can be achieved by maintaining replica servers (often called slaves), which can step in if the main server crashes. Another interesting concept in scaling databases is sharding. A shard is a horizontal partition of a database: the rows of a table are split into groups, and each group is stored on a separate server.
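
As a rough illustration of sharding, the following sketch routes each row to a shard by hashing its key. Hash-modulo routing is just one possible scheme and is chosen here as an assumption; the function name and the key format are hypothetical.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a row key to a shard number in the range 0..shard_count-1."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    # A stable hash is used so that every process maps a key the same way.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


if __name__ == "__main__":
    for user_id in ("user-1", "user-2", "user-3"):
        print(f"{user_id} is stored on shard {shard_for(user_id, 4)}")
```

A given key always lands on the same shard, so reads know where to look. One limitation of this simple scheme is that changing the number of shards remaps most keys, which is why schemes such as consistent hashing are sometimes used instead.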

3. Diversified Geographical Locations:

Scaling up your applications and then your databases is a really big step ahead, but what if all the servers are at the same physical location and something terrible like a natural disaster affects the data center at which your servers are located? This can lead to potentially huge downtimes.

It is, therefore, imperative that you keep your servers in different locations. Most modern web services allow you to select the geographical location of your servers. You should choose wisely to make sure your servers are distributed all over the world and not localized in an area.

Within this post, I have tried to touch upon the basic ideas behind high availability architecture. In the final analysis, it is evident that no single system can solve all the problems. Hence, you need to assess your situation carefully and decide which options suit it best. Here’s hoping that this introduced you to the world of high availability architecture and helped you decide how to go about achieving this for yourself.

What are the best practices?

Best Practices For High Availability

In order to curb system failures and keep both planned and unplanned downtimes at bay, the use of a High Availability (HA) architecture is highly recommended, especially for mission-critical applications. Availability experts insist that for any system to be highly available, its parts should be well designed and rigorously tested. The design and subsequent implementation of a high availability architecture can be difficult given the vast range of software, hardware and deployment options. However, a successful effort typically starts with distinctly defined and comprehensively understood business requirements. The chosen architecture should be able to meet the desired levels of security, scalability, performance and availability.

The only way to guarantee compute environments have a desirable level of operational continuity during production hours is by designing them with high availability. In addition to properly designing the architecture, enterprises can keep crucial applications online by observing the recommended best practices for high availability.

- Data Backups, Recovery and Replication

The hallmark of a good data protection plan that protects against system failure is a sound backup and recovery strategy. Valuable data should never be stored without proper backups, replication or the ability to recreate the data. Every data center should plan for data loss or corruption in advance. Data errors may create customer authentication issues, damage financial accounts and, subsequently, harm credibility in the business community. The recommended strategy for maintaining data integrity is creating a full backup of the primary database, then incrementally testing the source server for data corruption. Creating full backups is at the forefront of recovering from catastrophic system failure.
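
A backup is only useful if it can be trusted, so one common safeguard is to verify a copy against its source. The sketch below assumes the data to protect is a single file, such as a database dump; the function names are hypothetical and the text above does not prescribe any particular tool.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 checksum of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir and confirm the copy is identical."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copies content and file metadata
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"backup of {source} does not match the original")
    return target
```

Calling `backup_and_verify(Path("dump.sql"), Path("backups"))` with a hypothetical dump file returns the path of the verified copy, or raises an error if the copy differs from the original.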

- Clustering

Even with the highest quality of software engineering, all application services are bound to fail at some point. High availability is all about delivering application services regardless of failures. Clustering can provide instant failover application services in the event of a fault. An application service that is ‘cluster aware’ is capable of calling resources from multiple servers; it falls back to a secondary server if the main server goes offline. A High Availability cluster includes multiple nodes that share information via shared data memory grids. This means that any node can be disconnected from the network or shut down, and the rest of the cluster will continue to operate normally, as long as at least a single node is fully functional. Each node can be upgraded individually and rejoined while the cluster operates. The high cost of purchasing additional hardware to implement a cluster can be mitigated by setting up a virtualized cluster that utilizes the available hardware resources.

- Network Load Balancing

Load balancing is an effective way of increasing the availability of critical web-based applications. When a server failure is detected, the failed instance is seamlessly replaced as traffic is automatically redistributed to the servers that are still running. Not only does load balancing lead to high availability, it also facilitates incremental scalability. Network load balancing can be accomplished via either a ‘pull’ or a ‘push’ model. It facilitates higher levels of fault tolerance within service applications.
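
The redistribution of traffic away from failed servers can be sketched as a balancer that skips any server marked as down. How failures are detected is outside this sketch, and the class, method and server names are assumptions made for illustration.

```python
from typing import Iterable


class HealthAwareBalancer:
    """Rotate through servers, skipping those marked as unhealthy."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._index = 0

    def mark_down(self, server: str) -> None:
        """Record that a health check for this server failed."""
        self._healthy.discard(server)

    def mark_up(self, server: str) -> None:
        """Record that a known server has recovered."""
        if server in self._servers:
            self._healthy.add(server)

    def pick(self) -> str:
        """Return the next healthy server in rotation."""
        if not self._healthy:
            raise RuntimeError("no healthy servers available")
        # At least one server is healthy, so one lap always finds it.
        while True:
            server = self._servers[self._index]
            self._index = (self._index + 1) % len(self._servers)
            if server in self._healthy:
                return server


if __name__ == "__main__":
    balancer = HealthAwareBalancer(["web-a", "web-b", "web-c"])
    balancer.mark_down("web-b")  # simulate a failed health check
    print([balancer.pick() for _ in range(4)])
```

After `web-b` is marked down, requests alternate between the two remaining servers, and the failed server rejoins the rotation once `mark_up` is called for it.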

- Fail Over Solutions

High availability architecture traditionally consists of a set of loosely coupled servers which have failover capabilities. Failover is basically a backup operational mode in which the functions of a system component are assumed by a secondary system in the event that the primary one goes offline, either due to failure or planned downtime. A ‘cold failover’ occurs when the secondary server is only started after the primary one has been completely shut down. A ‘hot failover’ occurs when all the servers are running simultaneously, and the load is directed entirely towards a single server at any given time. In both scenarios, tasks are automatically offloaded to a standby system component so that the process remains as seamless as possible to the end user. Failover can be managed via DNS, in a well-controlled environment.
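
A hot failover, where the standby is already running, can be sketched as trying the primary first and handing the request to the standby if the primary is unreachable. The handler functions below are hypothetical stand-ins for real servers, and treating `ConnectionError` as the failure signal is an assumption of this sketch.

```python
from typing import Callable, Sequence

Handler = Callable[[str], str]


def call_with_failover(handlers: Sequence[Handler], request: str) -> str:
    """Try each server in priority order and return the first success."""
    last_error = None
    for handler in handlers:
        try:
            return handler(request)
        except ConnectionError as error:
            last_error = error  # this server is down, try the next one
    raise RuntimeError("all servers failed") from last_error


def primary(request: str) -> str:
    """Stand-in for a primary server that is currently offline."""
    raise ConnectionError("primary is offline")


def standby(request: str) -> str:
    """Stand-in for a standby server that is already running."""
    return f"standby handled {request}"


if __name__ == "__main__":
    print(call_with_failover([primary, standby], "ping"))
```

The caller only sees a successful response, which is the seamless behavior described above. A cold failover would differ in that the standby would first have to be started before it could take the request.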

- Geographic redundancy

Geo-redundancy is the only line of defense when it comes to preventing service failure in the face of catastrophic events such as natural disasters that cause system outages. As in the case of geo-replication, multiple servers are deployed at geographically distinct sites. The locations should be globally distributed and not localized in a specific area. It is crucial to run independent application stacks in each of the locations, so that if there is a failure in one location, the others can continue running. Ideally, these locations should be completely independent of each other.

- Plan for failure

Although applying the best practices for high availability is essentially planning for failure, there are other actions an organization can take to increase its preparedness in the event of a system failure leading to downtime. Organizations should keep failure or resource consumption data that can be used to isolate problems and analyze trends. This data can only be gathered through continuous monitoring of operational workload. A recovery help desk can be put in place to gather problem information, establish problem history, and begin immediate problem resolution. A recovery plan should not only be well documented but also tested regularly to ensure its practicality when dealing with unplanned interruptions. Staff training on availability engineering will improve their skills in designing, deploying, and maintaining high availability architectures. Security policies should also be put in place to curb incidents of system outages due to security breaches.

Example: FileCloud High Availability Architecture

The following diagram explains how FileCloud servers can be configured for High Availability to improve service reliability and reduce downtime. Click here for more details.

Frequently Asked Questions (FAQs)

What is meant by high availability?

High availability, or HA, is a label applied to systems that can operate continuously and dependably without failing. These systems are extensively tested and have redundant components to ensure high quality operational performance. In short, high availability systems will be available no matter what occurs.

What is the difference between high availability and redundancy?

Redundancy is often a component of high availability, but they have different meanings. High availability means that a system will be available regardless of circumstances, while redundancy in a system means that multiple components can replace one another to keep things running in case something happens.

Why is high availability important?

Customer satisfaction often relies on whether or not customers can access your product or service when they need to and whether or not they can depend on it to work. High availability architecture ensures that your website, application, or server continues to function through different demand loads and failure types.

What is high availability in cloud computing?

You can create high availability in cloud computing by making clusters. When a group of servers work together as a single server to deliver continuous uptime, those servers are called a high availability cluster. If one server fails or is otherwise unavailable, the other servers can step in.

What is AWS high availability & fault tolerance architecture?

AWS has services, like S3, SQS, ELB, and SimpleDB, and infrastructure tools, like EC2 and EBS, to help you create a high availability and fault tolerant system in the cloud. The high-level services are designed to support HA and fault tolerance, while infrastructure tools come with features like snapshots and availability zones.

By Team FileCloud