FileCloud High Availability

FileCloud High Availability Architecture

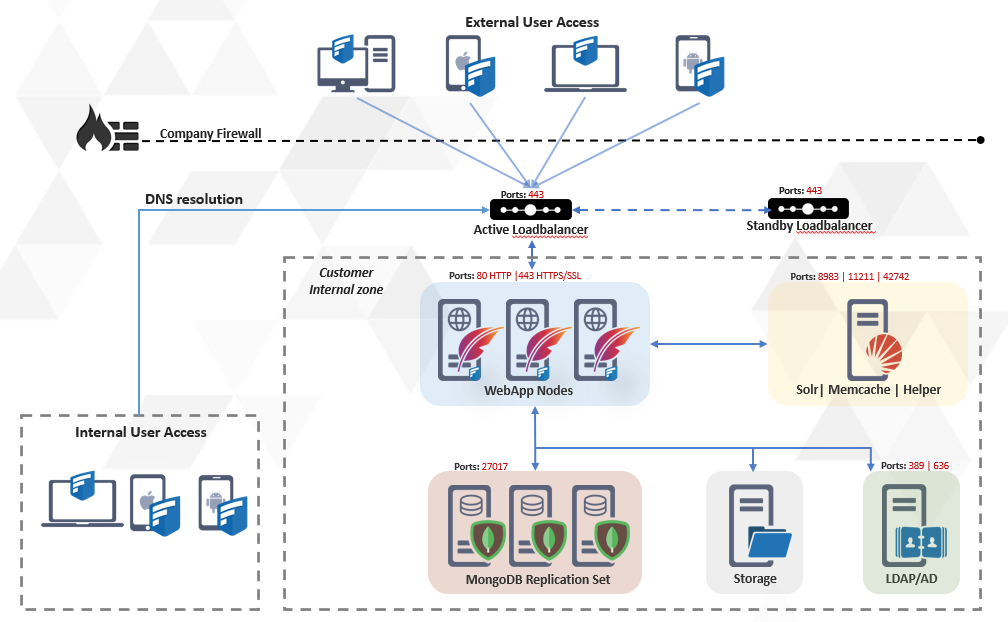

FileCloud servers can be configured for a high availability (HA) environment to improve service reliability and reduce downtime. FileCloud supports HA in Linux and Windows environments.

Load Balancers

The load balancer routes traffic to the FileCloud app server nodes. Load balancers (LB) give you better control over how traffic to your FileCloud servers is handled, which helps provide the best performance. If one or more app server nodes fail, the load balancer automatically reroutes traffic to the remaining app server nodes.
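The rerouting behavior described above can be illustrated with a minimal sketch. This is not FileCloud's or any particular load balancer's implementation. The class name `RoundRobinBalancer`, the node names, and the round-robin policy are all assumptions chosen for illustration.

```python
from typing import Dict, List


class RoundRobinBalancer:
    """Toy load balancer: rotates over nodes and skips unhealthy ones."""

    def __init__(self, nodes: List[str]) -> None:
        self.nodes = list(nodes)
        # Every node starts out healthy; a real LB would run health checks.
        self.healthy: Dict[str, bool] = {node: True for node in self.nodes}
        self._next = 0  # index of the next node to try

    def mark(self, node: str, is_healthy: bool) -> None:
        """Record the result of a (hypothetical) health check."""
        self.healthy[node] = is_healthy

    def pick(self) -> str:
        """Return the next healthy node, trying each node at most once."""
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next]
            self._next = (self._next + 1) % len(self.nodes)
            if self.healthy[node]:
                return node
        raise RuntimeError("no healthy app server nodes available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app1", "app2", "app3"])  # hypothetical node names
    lb.mark("app2", False)  # simulate an app server node failure
    print([lb.pick() for _ in range(4)])  # app2 is never selected
```

Because the app server nodes hold no application-specific data, skipping a failed node in this way does not lose any state. The request is simply served by another node.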

Typically there is no need to scale the number of load balancers because these servers can handle a very large amount of traffic. However, more than one load balancer can be used to provide additional reliability in the event that a load balancer fails.

To protect against load balancer hardware failure, you can create multiple DNS records for the load balancer host name.

The idea here is that different clients receive differently ordered lists of the IP addresses corresponding to your domain name. This has the effect of distributing requests across the group of IPs. If an IP address does not respond within an appropriate amount of time, the client times out on that request and moves on to the next IP address until it finds a valid connection or the list is exhausted.
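The client-side behavior described above can be sketched roughly as follows. This is an illustration only, not how any specific FileCloud client is implemented. The function name `connect_first_available`, the default port, the timeout value, and the example addresses are assumptions.

```python
import socket
from typing import Callable, Iterable, Optional


def connect_first_available(
    addresses: Iterable[str],
    port: int = 443,            # assumed HTTPS port
    timeout: float = 3.0,       # assumed per-address timeout in seconds
    connect: Callable[..., object] = socket.create_connection,
) -> Optional[object]:
    """Try each IP in the order the DNS answer listed it.

    Returns the first successful connection, or None when every
    address has failed or timed out (the list is exhausted).
    """
    for ip in addresses:
        try:
            return connect((ip, port), timeout=timeout)
        except OSError:
            # Covers refused connections and timeouts; move to the next IP.
            continue
    return None


if __name__ == "__main__":
    # Documentation-range addresses standing in for two load balancers.
    conn = connect_first_available(["192.0.2.10", "192.0.2.11"], timeout=1.0)
    print("connected" if conn else "all load balancer addresses failed")
```

The `connect` parameter exists only so the loop can be exercised without a network. In normal use the default `socket.create_connection` is kept.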

FileCloud Component: App server node

The FileCloud app server node consists of the Apache web server and the FileCloud application code that serves client requests. The FileCloud app server nodes do not contain any application-specific data. The data is retrieved from the MongoDB replica set. Because of this, FileCloud app server nodes can be added or removed without disrupting the service.

FileCloud Component: MongoDB Replica set

MongoDB replica sets provide high availability with automatic failover support. Failover allows a secondary member to become primary if the primary DB node fails. The minimum number of DB nodes needed for MongoDB is three. All app server nodes connect to the primary node. If the primary node fails, a new primary is elected and all the app server nodes switch to the new primary.
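The three-node minimum follows from how elections work: a new primary can only be elected when a majority of voting members is reachable. The short sketch below works through that arithmetic, assuming every member of the replica set is a voting member. The helper names `majority` and `fault_tolerance` are illustrative and not part of MongoDB.

```python
def majority(voting_members: int) -> int:
    """Smallest number of votes that is more than half of the set."""
    return voting_members // 2 + 1


def fault_tolerance(voting_members: int) -> int:
    """How many members can fail while an election can still succeed."""
    return voting_members - majority(voting_members)


if __name__ == "__main__":
    for n in (2, 3, 5):
        print(f"{n} members: majority={majority(n)}, "
              f"tolerated failures={fault_tolerance(n)}")
```

With two members, losing either one leaves no majority, so no failover is possible. With three members, one node can fail and the remaining two can still elect a new primary.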

This document describes the classic 3-tier approach with the load balancer handling the client traffic, application server nodes serving requests, and redundant database servers storing application data.

To set up a High Availability FileCloud system, use the instructions on the following pages in order:

- Installation and Configuration of 3 server MongoDB cluster

- Configure Memcache for HA Environments

- Configure Solr for HA Environments

- Install and Configure FileCloud Web Servers for HA

- Configure Storage for HA

- Set Up Load Balancing

- Installation and Configuration of Standalone Backup Server

- HA System Tests and License Installation

- Configure Cluster Authentication with SSL